Deferred Rendering Explained

This post should be called 'Deferred Rendering the Photostory'. There are many articles about this technique, but they all make a lot of assumptions and usually end with 'and then you render proxy geometry...'. Proxy what? Geometry where? Render how? Hence this post.

I figured out the ideas behind this code from the "A 2.5D Culling for Forward+" presentation and by trying to decode the ThreeJS DeferredRenderer.

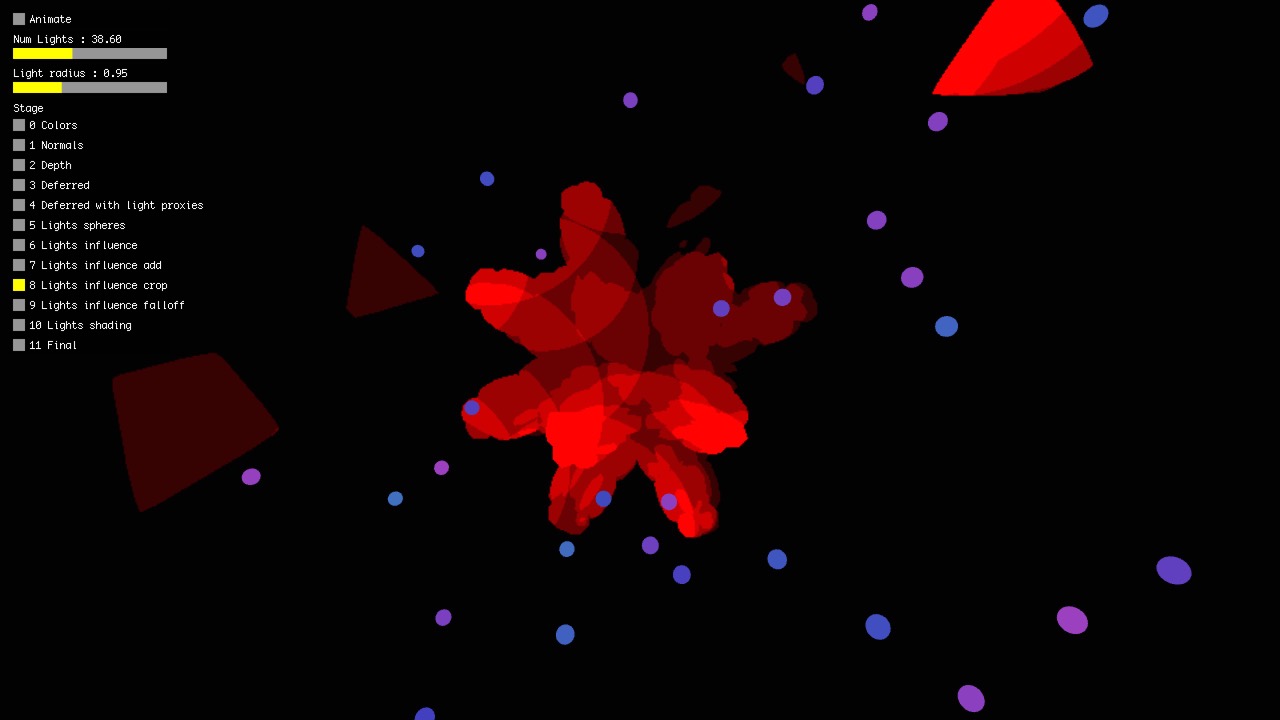

The motivation for deferred rendering is that we want a lot of lights, and to achieve that we want to do as few duplicated calculations as possible. So we delay (defer) computing the lighting as long as possible and limit the calculations to the area visible within each light's radius.

Note: This is a fairly high-level description that assumes you know OpenGL / WebGL well. For implementation details, look at the code.

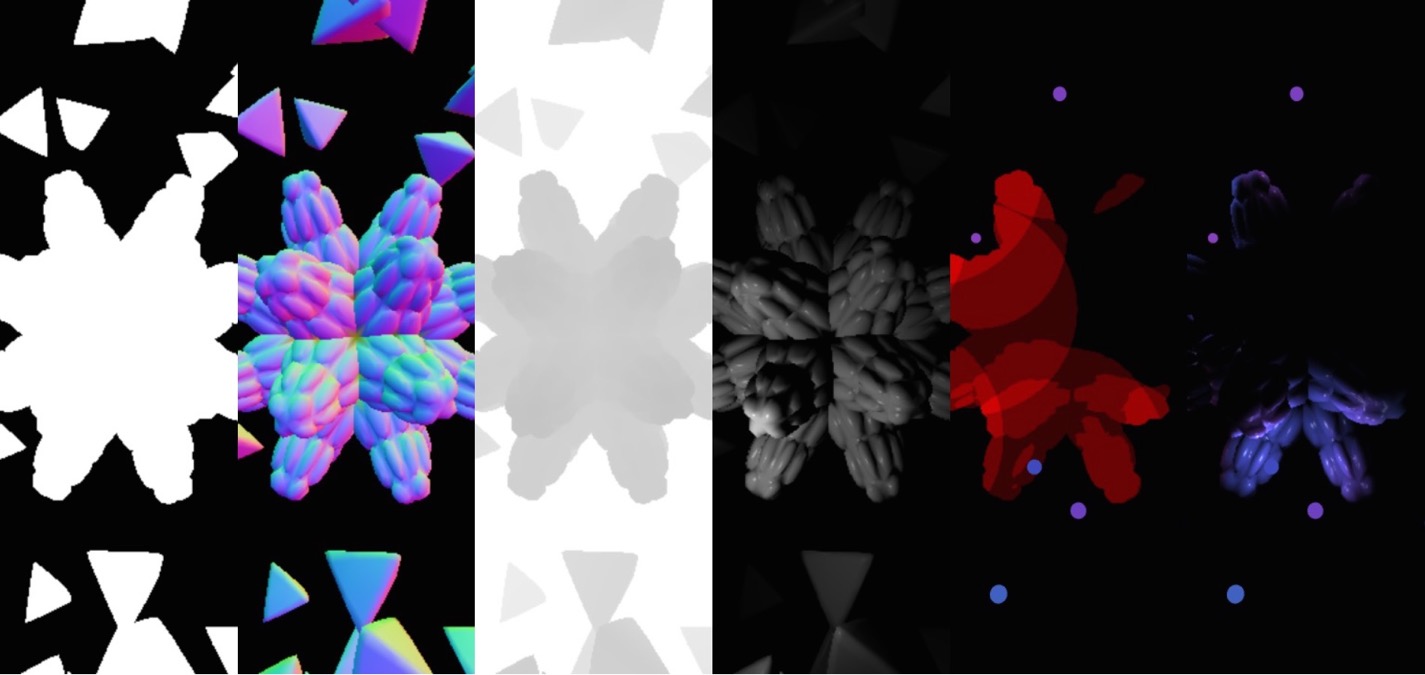

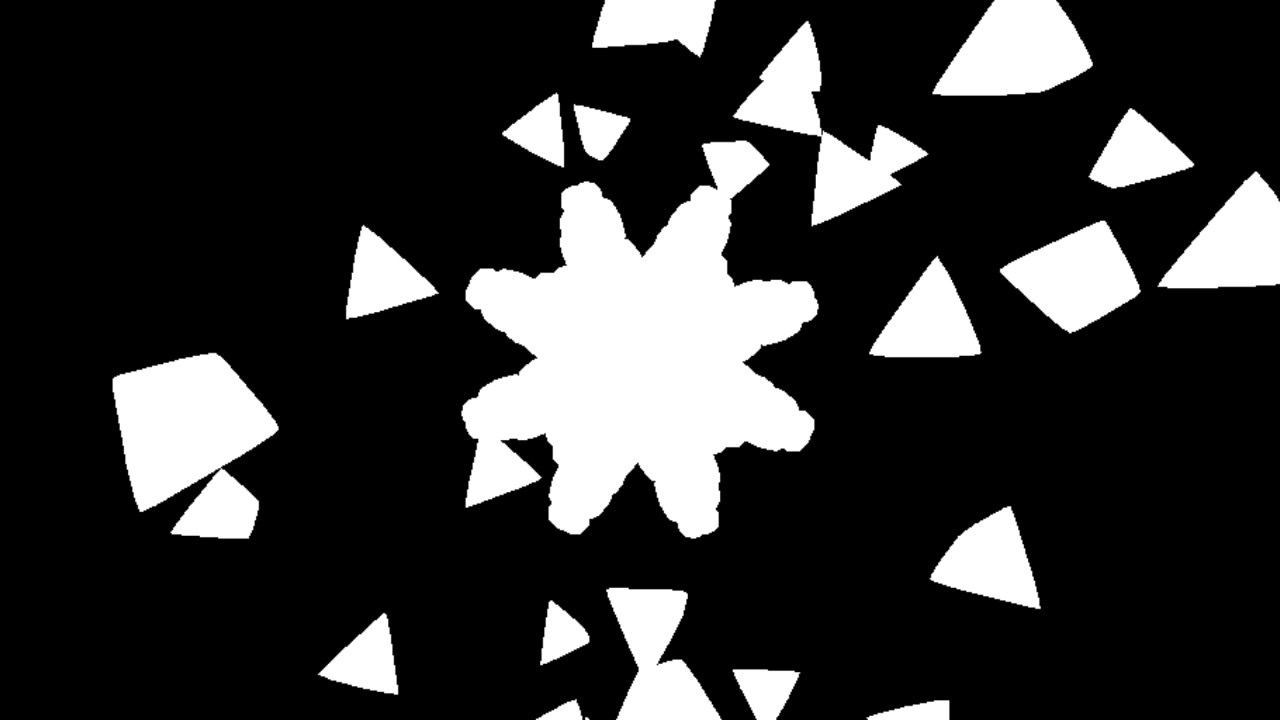

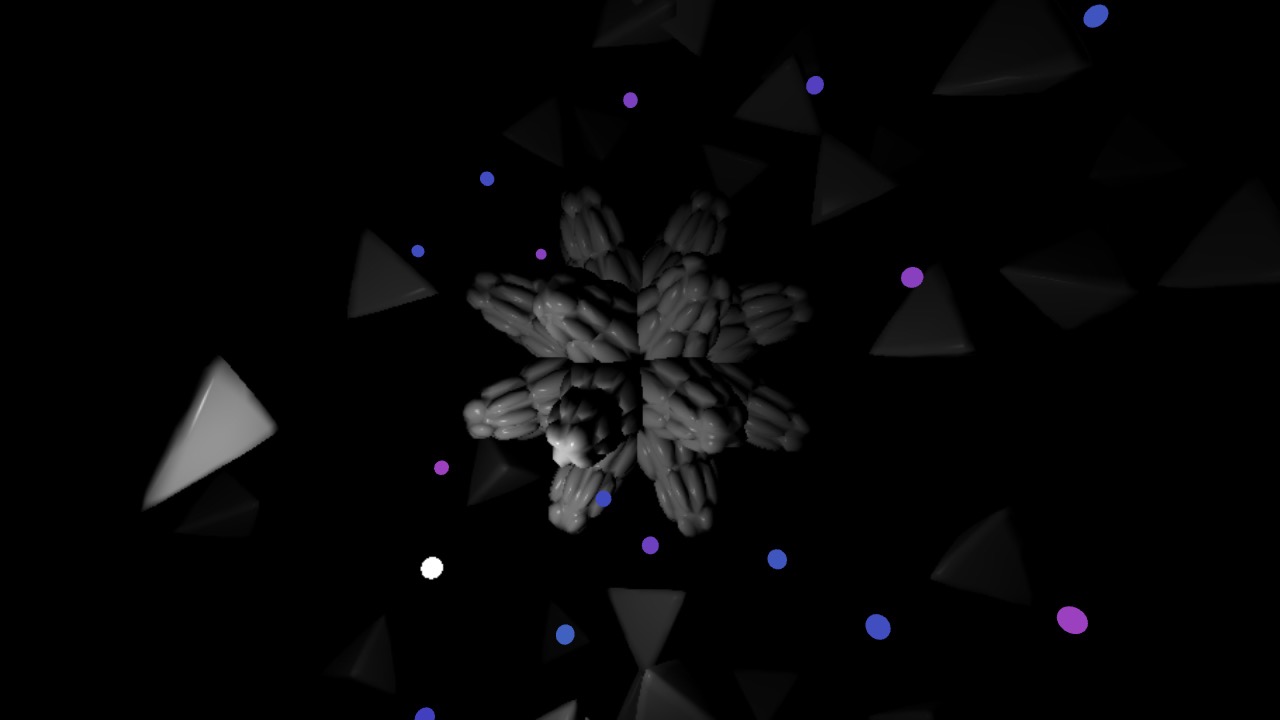

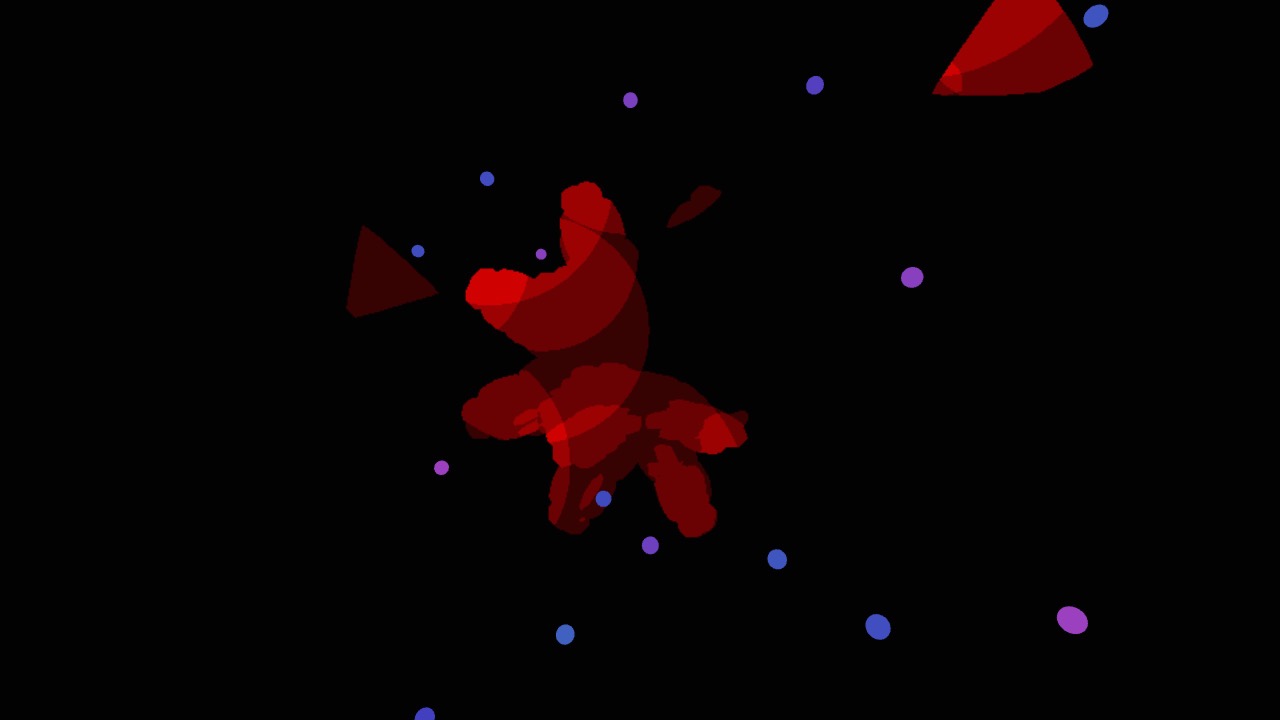

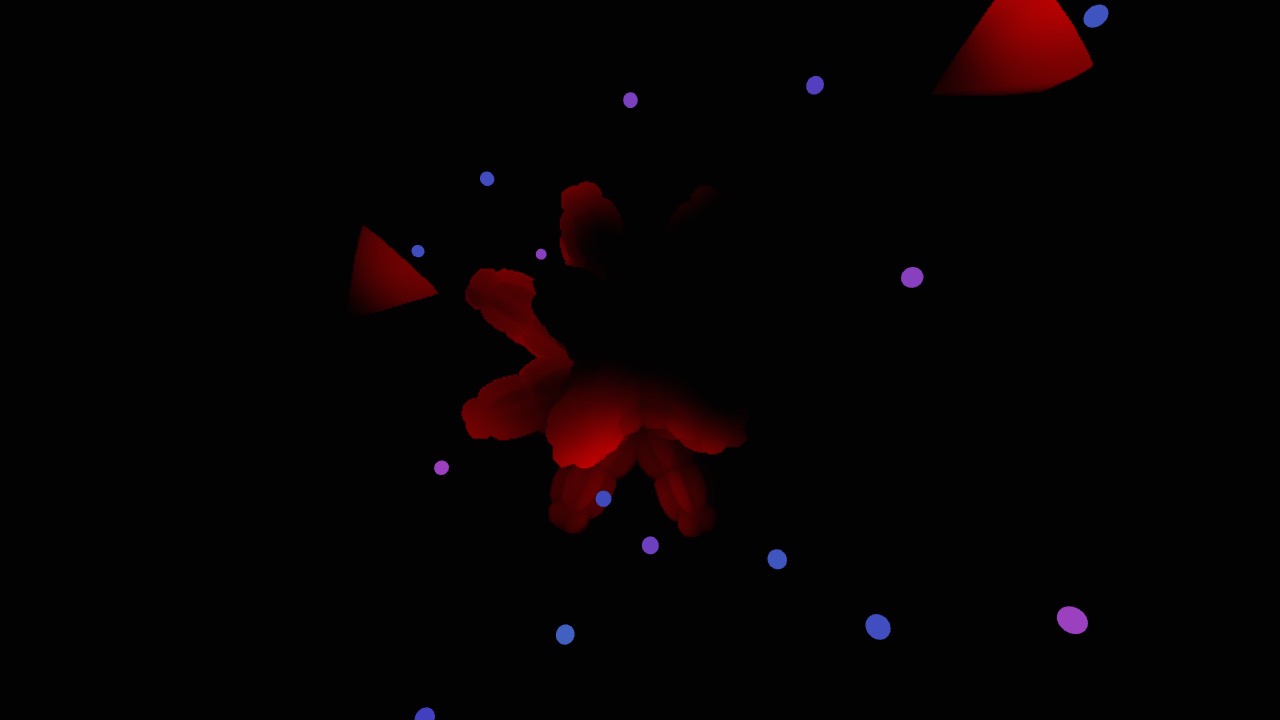

We start by rendering scene colors to an offscreen texture.

Here all the shapes are white because their material is plain white. I did that to better show how each light influences the shading.
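The offscreen textures written in this and the next two steps are commonly called a G-buffer. The sketch below models one on the CPU to show what each pixel ends up storing. The texture formats (RGBA8 for color and normals, a float for depth) and the names `GBuffer`, `createGBuffer` and `writeFragment` are illustrative assumptions, not the demo's code; on the GPU these would be filled either in one pass with multiple render targets or in several passes.

```typescript
// Hypothetical CPU-side model of the G-buffer filled by the scene pass.
// Assumption: albedo and normals are RGBA8, depth is a float in [0, 1] (1 = far plane).
interface GBuffer {
  width: number;
  height: number;
  albedo: Uint8Array;  // 4 bytes (RGBA) per pixel
  normal: Uint8Array;  // 4 bytes per pixel, normal packed into 0..255
  depth: Float32Array; // one depth value per pixel
}

function createGBuffer(width: number, height: number): GBuffer {
  const pixels = width * height;
  return {
    width,
    height,
    albedo: new Uint8Array(pixels * 4),
    normal: new Uint8Array(pixels * 4),
    depth: new Float32Array(pixels).fill(1), // cleared to the far plane
  };
}

// Stores one fragment, mimicking depth test LESS: it only lands if it is nearer
// than what is already stored. Returns whether the write happened.
function writeFragment(
  g: GBuffer,
  x: number,
  y: number,
  rgba: [number, number, number, number],
  packedNormal: [number, number, number],
  depth: number,
): boolean {
  const i = y * g.width + x;
  if (!(depth < g.depth[i])) return false;
  g.albedo.set(rgba, i * 4);
  g.normal.set([packedNormal[0], packedNormal[1], packedNormal[2], 255], i * 4);
  g.depth[i] = depth;
  return true;
}
```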

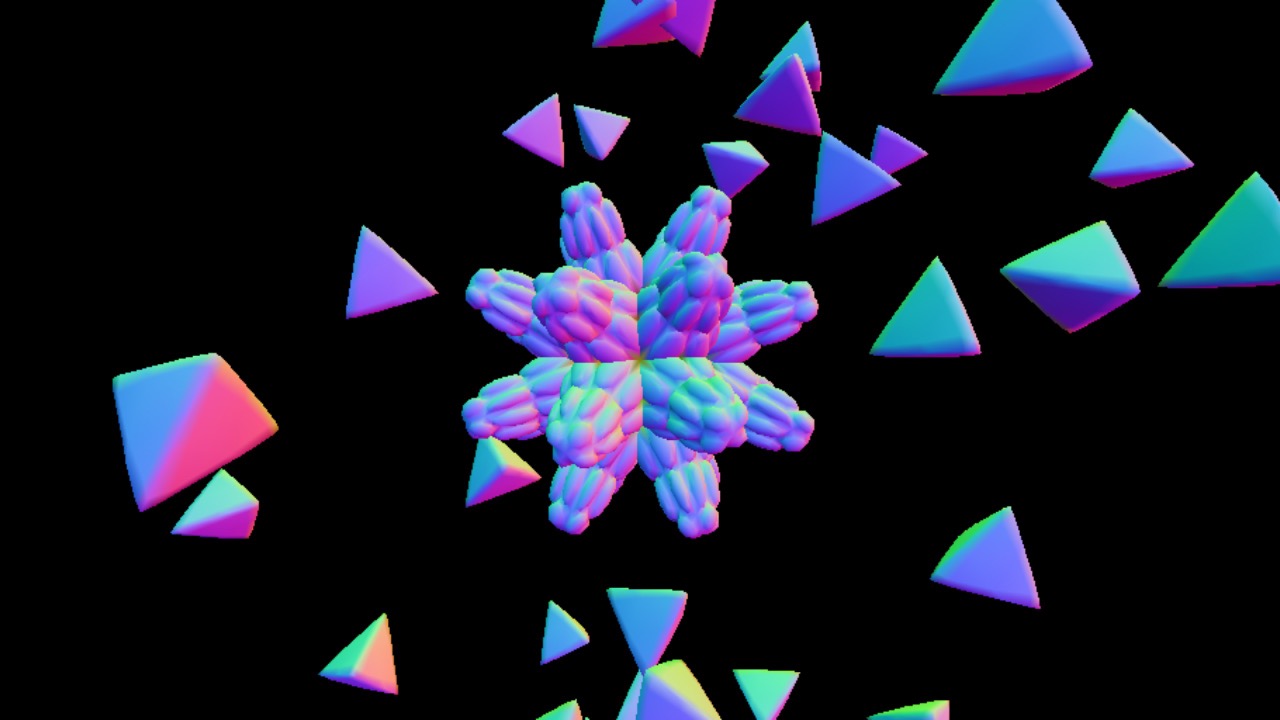

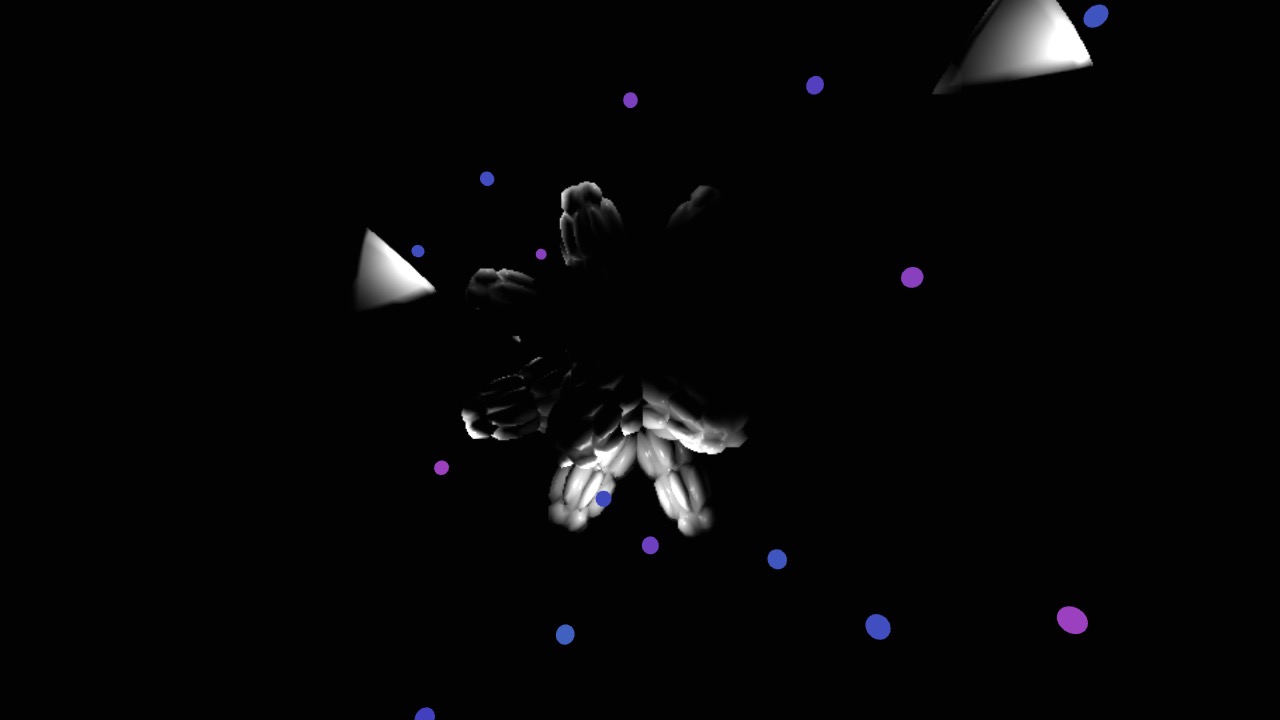

Then we render per-pixel normals for the whole scene to another offscreen texture.
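Unless the normal texture uses a floating-point format, normals with components in [-1, 1] have to be remapped into [0, 1] before they fit an 8-bit channel. A minimal sketch of that packing, assuming an RGBA8 target (the function names are hypothetical); in GLSL the same step is usually written `n * 0.5 + 0.5`.

```typescript
type Vec3 = [number, number, number];

// Packs a unit normal with components in [-1, 1] into 8-bit values in [0, 255].
function packNormal(n: Vec3): Vec3 {
  return n.map((c) => Math.round((c * 0.5 + 0.5) * 255)) as Vec3;
}

// Reverses packNormal, then re-normalizes to reduce the 8-bit quantization error.
function unpackNormal(p: Vec3): Vec3 {
  const v = p.map((c) => (c / 255) * 2 - 1) as Vec3;
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
}
```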

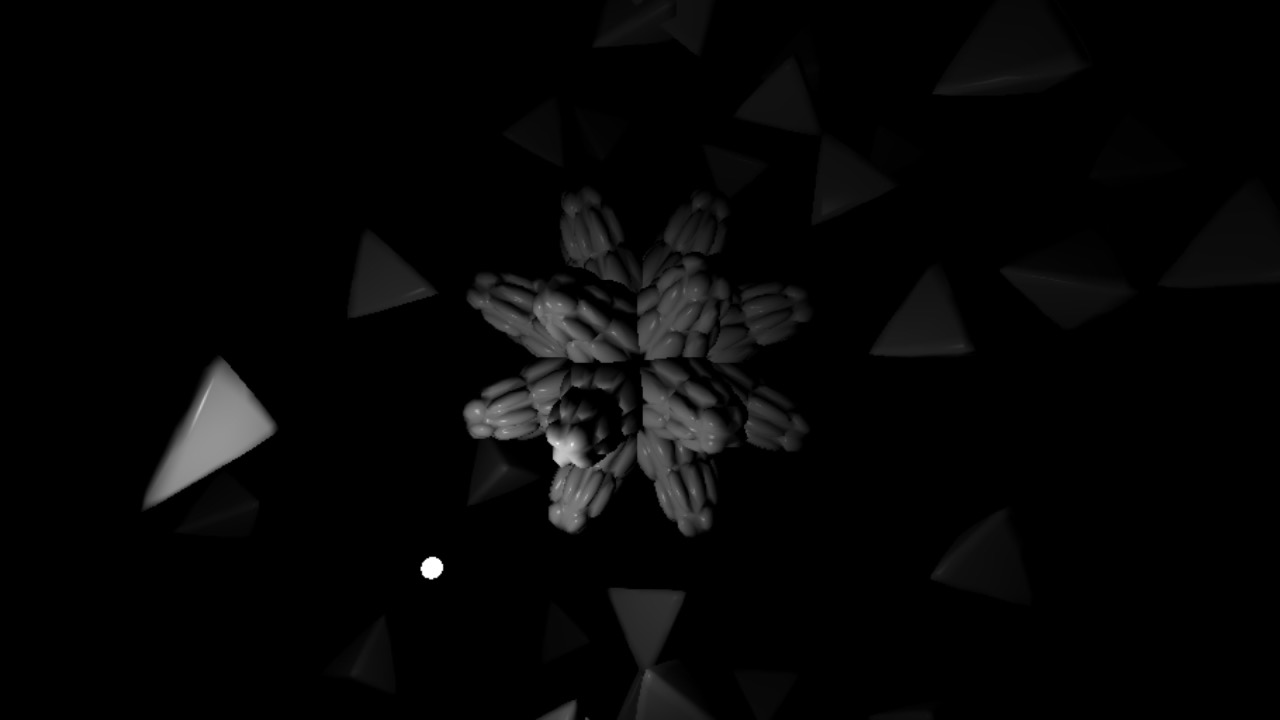

And depth.
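The depth buffer does not store distance linearly. Assuming a standard OpenGL-style perspective projection with near plane `near`, far plane `far` and the default depth range [0, 1], a stored depth value can be turned back into view-space Z as sketched here (`depthToViewZ` and `viewZToDepth` are illustrative names):

```typescript
// Converts a stored depth d in [0, 1] to view-space Z. The camera looks down -Z,
// so visible points have negative Z. Assumes a standard OpenGL perspective matrix.
function depthToViewZ(d: number, near: number, far: number): number {
  const zNdc = d * 2 - 1; // window depth [0, 1] -> NDC depth [-1, 1]
  return (-2 * far * near) / (far + near - zNdc * (far - near));
}

// Inverse mapping: what the depth buffer would hold for a point at view-space Z.
function viewZToDepth(z: number, near: number, far: number): number {
  const zNdc = (far + near) / (far - near) + (2 * far * near) / ((far - near) * z);
  return zNdc * 0.5 + 0.5;
}
```

With `near = 0.1` and `far = 100`, a stored depth of 0.5 already corresponds to a point only about 0.2 units in front of the camera, which is why depth values cannot be used as distances directly.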

Now if we draw a fullscreen quad, then for each pixel we can reconstruct the camera-space position from the depth and calculate lighting for the current light using the normal and albedo color read from the offscreen textures.
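Reconstructing the camera-space position needs the pixel's normalized device coordinates plus its view-space Z recovered from the stored depth. A sketch assuming a symmetric perspective projection described by a vertical field of view and an aspect ratio; the helper names are hypothetical:

```typescript
type Vec3 = [number, number, number];

// Converts a pixel center to normalized device coordinates in [-1, 1].
// WebGL's window origin is the bottom-left corner, so y grows upward.
function pixelToNdc(px: number, py: number, width: number, height: number): [number, number] {
  return [((px + 0.5) / width) * 2 - 1, ((py + 0.5) / height) * 2 - 1];
}

// Rebuilds the camera-space position seen at (ndcX, ndcY) from its view-space Z
// (negative in front of the camera). Assumes a symmetric perspective projection
// with vertical field of view fovY (radians) and aspect = width / height.
function viewPositionFromNdc(
  ndcX: number,
  ndcY: number,
  viewZ: number,
  fovY: number,
  aspect: number,
): Vec3 {
  const tanHalf = Math.tan(fovY / 2);
  const dist = -viewZ; // distance along the viewing axis
  return [ndcX * tanHalf * aspect * dist, ndcY * tanHalf * dist, viewZ];
}
```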

But what if we want many (hundreds of) lights? We can't draw hundreds of fullscreen quads.
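A quick count shows why. Assuming a 1920×1080 framebuffer and 100 lights (both numbers are only illustrative), every fullscreen quad runs the lighting shader once per pixel:

$$
100 \times (1920 \times 1080) = 100 \times 2\,073\,600 = 207\,360\,000 \ \text{fragment shader invocations per frame}
$$

At 60 frames per second that is over 12 billion invocations per second, most of them for pixels a given light cannot reach.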

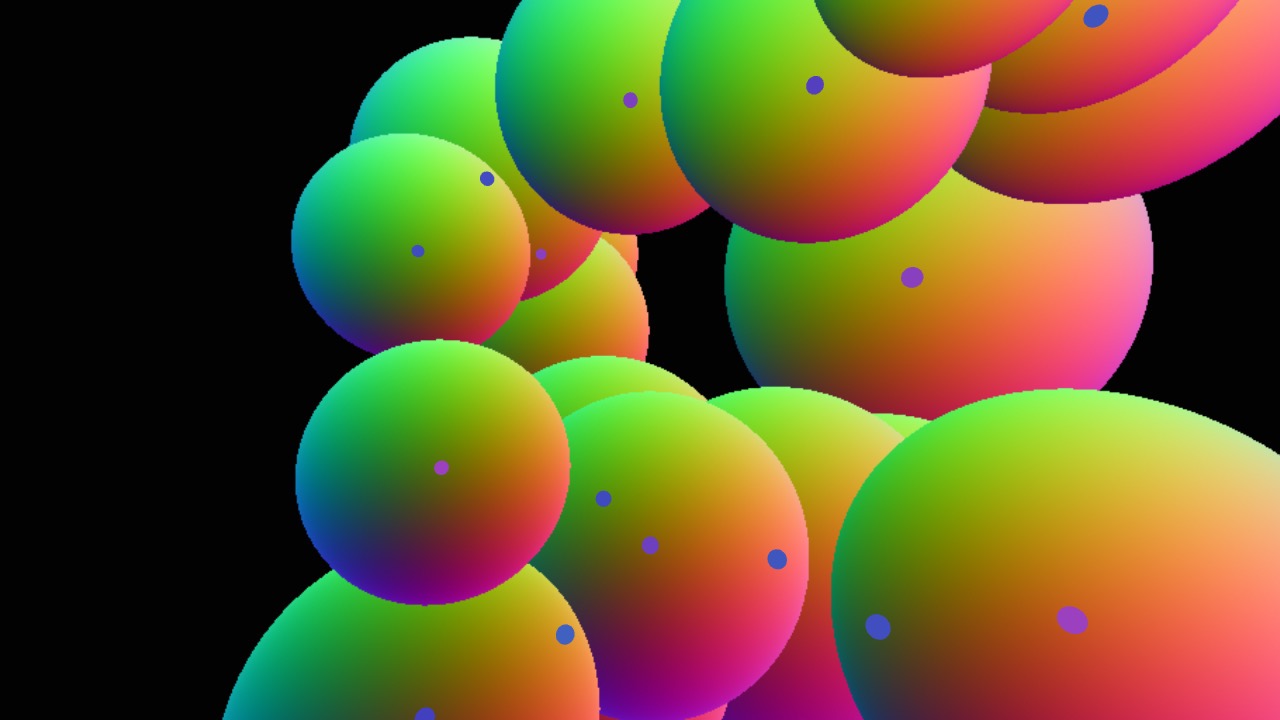

We solve that by drawing a sphere for each light. The radius of the sphere depends on the light radius and its falloff curve. At the edge of the sphere the light brightness should reach zero, so shading pixels outside that radius would contribute nothing.
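How the radius follows from the falloff depends on the curve. As an assumption (the demo's exact curve isn't spelled out here), take the common attenuation `intensity / (kc + kl*d + kq*d^2)`, which never reaches exactly zero. A practical radius is then the distance where it drops below a small threshold such as 1/256, which is the positive root of a quadratic. `lightVolumeRadius` is a hypothetical helper:

```typescript
// Assumed attenuation model: intensity / (kc + kl*d + kq*d^2).
// Returns the distance d at which the attenuated intensity equals `threshold`,
// usable as the proxy sphere radius.
function lightVolumeRadius(
  intensity: number,
  kc: number,
  kl: number,
  kq: number,
  threshold: number,
): number {
  const target = intensity / threshold; // required value of kc + kl*d + kq*d^2
  if (target <= kc) return 0; // the light never exceeds the threshold
  if (kq === 0) return (target - kc) / kl; // linear-only attenuation (Infinity if kl is 0)
  // Positive root of kq*d^2 + kl*d + (kc - target) = 0.
  return (-kl + Math.sqrt(kl * kl - 4 * kq * (kc - target))) / (2 * kq);
}
```

For `intensity = 1`, `kc = 1`, `kl = 0`, `kq = 1` and a threshold of 0.01, the radius comes out as √99 ≈ 9.95.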

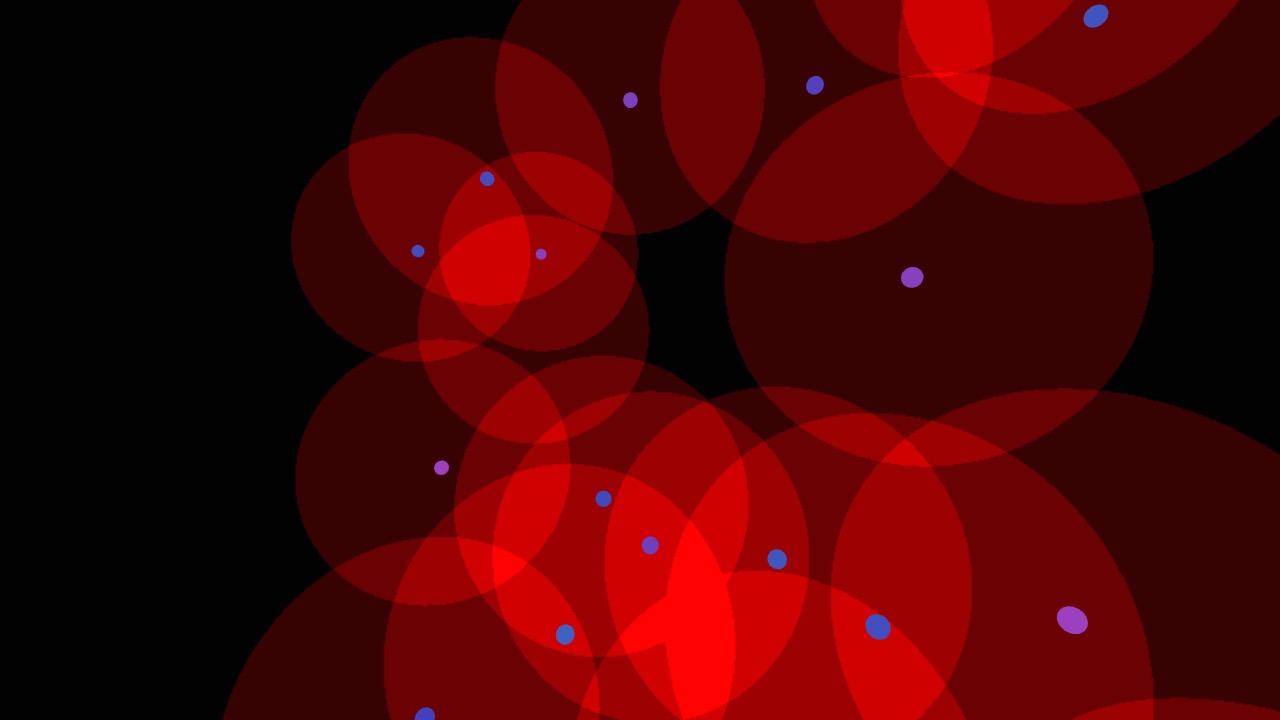

Here are the pixels covered by all the spheres.

I use red as a debug color to make it stand out.

Many spheres overlap, which means more than one light contributes to those pixels. We add all the contributions together using additive blending.
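In WebGL, additive blending is typically enabled with `gl.enable(gl.BLEND)` and `gl.blendFunc(gl.ONE, gl.ONE)`, so each fragment's color is added to what is already in the target. The CPU simulation below (hypothetical names, one RGB triple per pixel) shows what that accumulation computes:

```typescript
type RGB = [number, number, number];

// Simulates blendFunc(ONE, ONE) with the default FUNC_ADD equation:
// dst = src * 1 + dst * 1, per channel.
function blendAdditive(dst: Float32Array, pixel: number, src: RGB): void {
  for (let c = 0; c < 3; c++) dst[pixel * 3 + c] += src[c];
}

// Accumulates every light's contribution into a lighting buffer cleared to black.
function accumulate(
  width: number,
  height: number,
  contributions: { pixel: number; color: RGB }[],
): Float32Array {
  const buffer = new Float32Array(width * height * 3);
  for (const { pixel, color } of contributions) blendAdditive(buffer, pixel, color);
  return buffer;
}
```

With an 8-bit render target the sum would clamp at 1.0 per channel, which is one reason a higher-precision lighting buffer and tone mapping become useful once many lights overlap.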

That's still a lot of pixels.

Now comes the trick.

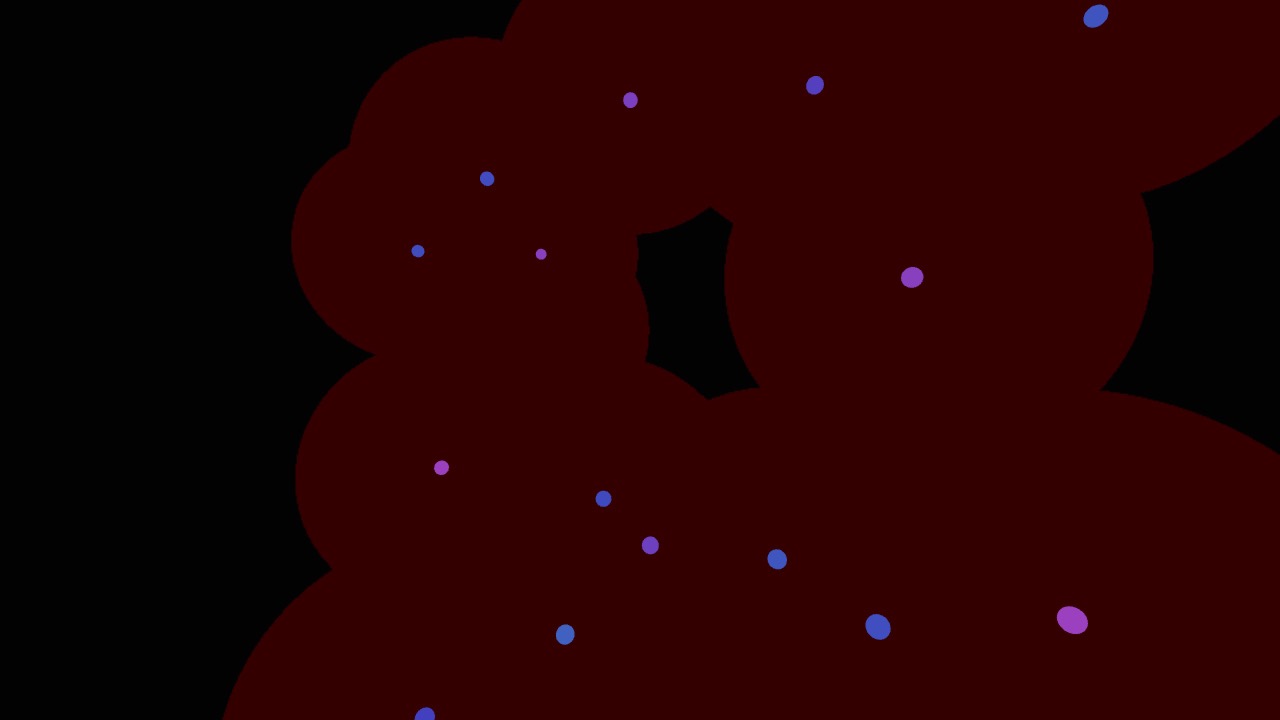

- draw only the back faces of each light proxy sphere (cull the front faces)

- draw each light proxy sphere with depth test set to GREATER

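With this setup a pixel is drawn only where the scene surface lies in front of the sphere's back side. Empty background and objects behind the light fail the depth test, which greatly reduces the total number of pixels drawn. Surfaces in front of the sphere can still pass, but they lie outside the light radius, so the falloff zeroes their contribution.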
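A common way to express this state in WebGL is `gl.enable(gl.CULL_FACE)` with `gl.cullFace(gl.FRONT)` so only back faces remain, `gl.depthFunc(gl.GREATER)`, and `gl.depthMask(false)` so the spheres never overwrite the scene depth; the lighting pass also has to reuse the depth buffer from the scene pass. Whether the demo sets exactly these flags is an assumption. Drawing back faces also keeps a light working when the camera is inside its sphere, where the front faces would be clipped by the near plane. The sketch below replays the per-pixel test on the CPU with window-space depths in [0, 1] (larger is farther); the function names are hypothetical:

```typescript
// depthFunc(GREATER): the sphere's back-face fragment passes only if it is
// farther away than the scene surface already stored in the depth buffer.
function passesGreater(sphereBackDepth: number, sceneDepth: number): boolean {
  return sphereBackDepth > sceneDepth;
}

// Counts the pixels that would be shaded for one light. sphereBackDepth holds the
// depth of the sphere's back face per pixel, or NaN where the sphere does not cover it.
function countShadedPixels(sceneDepth: Float32Array, sphereBackDepth: Float32Array): number {
  let count = 0;
  for (let i = 0; i < sceneDepth.length; i++) {
    const back = sphereBackDepth[i];
    if (!Number.isNaN(back) && passesGreater(back, sceneDepth[i])) count++;
  }
  return count;
}
```

For example, with scene depths `[0.3, 0.9, 1.0, 0.5]` and sphere back-face depths `[0.6, 0.6, 0.6, NaN]`, only the first pixel is shaded: the second is an object behind the light, the third is empty background at the far plane, and the fourth is outside the sphere.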

We multiply that by the light falloff.
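The exact falloff curve isn't given here. As an assumption, a simple curve that starts at 1 at the light and reaches exactly 0 at the sphere radius is `(1 - d / radius)^2` clamped to [0, 1]. A sketch with a hypothetical `falloff` helper, where `position` would be the reconstructed camera-space position of the pixel:

```typescript
type Vec3 = [number, number, number];

// Assumed falloff: 1 at the light position, smoothly reaching exactly 0 at `radius`,
// so anything outside the proxy sphere contributes nothing.
function falloff(position: Vec3, lightPos: Vec3, radius: number): number {
  const d = Math.hypot(
    position[0] - lightPos[0],
    position[1] - lightPos[1],
    position[2] - lightPos[2],
  );
  const t = Math.min(Math.max(1 - d / radius, 0), 1);
  return t * t;
}
```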

We calculate the shading for those pixels.
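For plain diffuse surfaces like the white shapes here, the shading term is typically Lambert's cosine law, `max(N · L, 0)`, with `L` the unit vector from the surface toward the light. This is an assumed shading model (the demo may use something richer); a minimal sketch with hypothetical helper names:

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

function normalize(v: Vec3): Vec3 {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
}

// Lambert diffuse term. Assumes `normal` is unit length and that the normal,
// position and light position are all expressed in the same (camera) space.
function lambert(normal: Vec3, position: Vec3, lightPos: Vec3): number {
  const l = normalize(sub(lightPos, position)); // direction toward the light
  return Math.max(dot(normal, l), 0); // surfaces facing away get no light
}
```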

And finally we add the light colors.
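Putting the per-pixel terms together, each light contributes `albedo * lightColor * (N · L) * falloff`, and additive blending sums those contributions across lights. A sketch of that final sum for a single pixel, with hypothetical types and the per-light terms passed in precomputed:

```typescript
type RGB = [number, number, number];

interface LightSample {
  color: RGB;      // light color, including its intensity
  nDotL: number;   // Lambert term for this pixel, already clamped to >= 0
  falloff: number; // distance falloff for this pixel
}

// Sums every light's contribution for one pixel: albedo * color * N.L * falloff.
function shadePixel(albedo: RGB, lights: LightSample[]): RGB {
  const out: RGB = [0, 0, 0];
  for (const l of lights) {
    const k = l.nDotL * l.falloff;
    for (let c = 0; c < 3; c++) out[c] += albedo[c] * l.color[c] * k;
  }
  return out;
}
```

With the plain white material used here, the albedo is `[1, 1, 1]`, so each pixel's final color is simply the weighted sum of the colors of the lights reaching it.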

demo/

You are welcome to play with the live version.

Source code is on GitHub.

/experiments/deferred-rendering/demo/

A more advanced example with tone mapping and gamma correction.
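Tone mapping compresses the unbounded sum of many lights into the displayable [0, 1] range, and gamma correction encodes the linear result for the display. The operators below, Reinhard `c / (1 + c)` and a power of 1/2.2, are common textbook choices used here as assumptions; the demo may use different ones, and the function names are hypothetical:

```typescript
type RGB = [number, number, number];

// Reinhard tone mapping: maps [0, inf) into [0, 1), compressing bright values most.
function toneMapReinhard(c: number): number {
  return c / (1 + c);
}

// Approximate display encoding with a plain power curve (assumed gamma of 2.2).
function gammaEncode(c: number, gamma = 2.2): number {
  return Math.pow(c, 1 / gamma);
}

// Linear HDR lighting result -> display value, tone mapping before gamma encoding.
function toDisplay(rgb: RGB): RGB {
  return rgb.map((c) => gammaEncode(toneMapReinhard(c))) as RGB;
}
```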

As a bonus, below is a video from Fibers, which uses a similar technique but with even more advanced (physically based) lighting.